I studied Software Engineering in college. Most of the major classes were about various software topics, of course, but I was also required to take a couple of hardware/electronics classes where we soldered together transistors and logic gates and such things. At the time I didn’t particularly enjoy it – software was logical and always worked the same way while hardware was messy and was constantly not working due to bad solder joints or “letting the magic smoke out” or what have you. Very frustrating.

Fast forward 20 years and I’ve been doing the software thing for a long time. I’m still passionate about programming and love what I do but I was looking for something new to spend hobby time on. Preferably something relatively cheap, that I could combine with my interest in amateur science, and that would bring me back to a state of “beginner mind” where I could have fun learning again. I decided to turn my attention back to the world of electronics to see if it would “take” any better the second time around.

It turns out that the world of hobby electronics is currently in a golden age and it’s amazing! Between the ocean of free information available on the internet, the fantastic companies that cater to hobbyists, and the wide availability of low-cost, high-quality tools and equipment, there’s never been a better time to get into the hobby.

So you want to hack some hardware?

I’ve been working with this new hobby for about a year now and while I’m certainly no expert, I thought I’d share the results of some of my research to maybe save others some time and to “give back” to the internet community that’s helped me so much. This post will be a high-level overview of some of the interesting directions one can explore, and the resources I’ve found to be the most useful. It’s adapted from a talk I did for the South Sound Developers User Group earlier this year.

As a software developer, it’s pretty easy to ease into the electronics field, starting with high-level things that are comfortably familiar and working your way down to raw electronics with transistors and opamps and stuff. There is a huge array of options available and I can’t possibly cover them all, so I’ll just describe the journey I took and the results I got.

Microprocessor boards

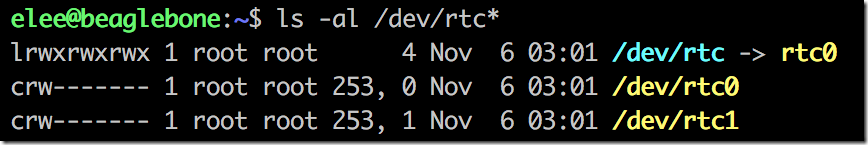

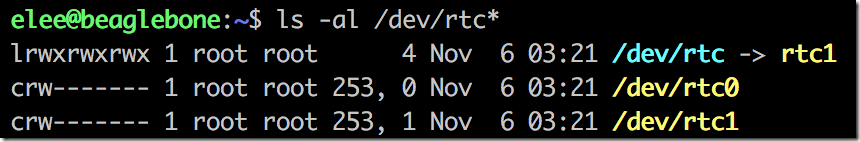

Probably the easiest place to start is with one of the many types of small microprocessor boards available today. Two of the most popular right now are the Raspberry Pi and the BeagleBone Black. These are full self-contained computers on a single small board, usually running some kind of Linux-based OS on an ARM processor. The fact that they’re small and low-power is nice, I guess, but the really interesting thing about them is that they usually include a number of general purpose I/O pins that you can hook up to external electronic circuits in order to control them or to acquire data from them. These GPIO pins aren’t tied to any kind of complex protocol like USB or even serial; instead you can directly set each output pin on or off, or check the current state of each input pin. This allows you to do something trivial like blink an LED on and off, or things that are far more complex.
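
To make the “blink an LED” idea concrete, here is a minimal Python sketch of what toggling an output pin can look like on a Linux board. It assumes the kernel’s legacy sysfs GPIO interface, and it assumes the pin has already been exported and set up as an output. The `blink` helper and the `gpio60` path in the usage comment are my own illustrative choices, not anything specific to a particular board, so check your board’s pin map before trying it.

```python
import time


def blink(value_path, times=5, period_s=1.0, sleep=time.sleep):
    """Toggle a GPIO 'value' file on and off `times` times.

    value_path is assumed to be something like
    /sys/class/gpio/gpio60/value, for a pin that has already been
    exported and had its direction set to "out".
    """
    half_period = period_s / 2
    for _ in range(times):
        for state in ("1", "0"):  # "1" drives the pin high, "0" drives it low
            with open(value_path, "w") as pin:
                pin.write(state)
            sleep(half_period)


# Hypothetical usage on a real board (the pin number is an assumption):
# blink("/sys/class/gpio/gpio60/value", times=10, period_s=0.5)
```

The point is just that there’s no protocol involved: writing a 1 or a 0 is all it takes to turn the pin on or off.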

![product_detail_black_sm[1] product_detail_black_sm[1]](https://saintgimp.files.wordpress.com/2014/07/product_detail_black_sm1_thumb.jpg?w=240&h=194)

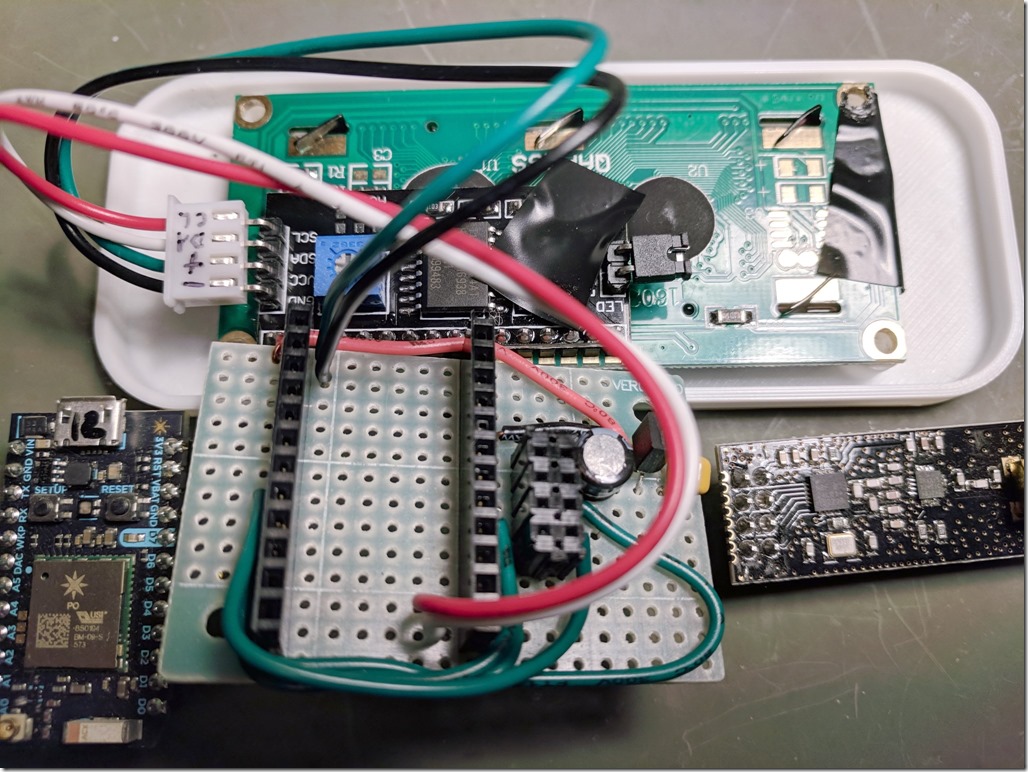

One of my first electronics purchases was a BeagleBone Black and I’m very happy with it. It has enough processing power to run Linux, lets me program in my language of choice (Python, JavaScript, C, etc.), and is easy to hook up to my home network and thus to the internet. It has a wide array of GPIO pins, both digital and analog, and also has USB and HDMI ports to connect a monitor and keyboard if desired (though I usually just SSH into it).

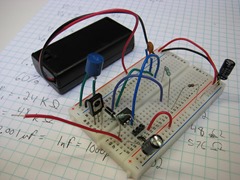

I bought the BeagleBone Black along with an inexpensive beginner’s electronics parts kit (see below) and had a lot of fun wiring up LEDs, switches, and other components on a breadboard and connecting them to the BeagleBone. I refreshed my memory about the basics of simple electronic circuits but soon found myself wanting to go a bit more low-level. After all, the BeagleBone Black wasn’t much different from the laptop I work on every day and I wanted something further removed from my day job.

Microcontroller boards

Having the power of a full Linux OS is nice but I wanted to get a little closer to the hardware. I picked up an Arduino Uno, which is a microcontroller board. Like the BeagleBone Black, the Arduino has several digital and analog GPIO pins with which to interface with external electronic circuits. However, unlike microprocessor boards, the hardware resources available on a microcontroller board are much more limited. The Arduino Uno has only 32 KB of storage and 2 KB of RAM, so you aren’t going to be running Linux on this thing. In fact, you don’t have any kind of OS available at all.

Instead, you write self-contained programs which are uploaded to the board over USB and get directly executed by the microcontroller. There’s no OS to get in the way; your program is the only code running and you have full control over and responsibility for everything that happens. This is simultaneously limiting (no networking, video, or keyboard/mouse supported out of the box) and also liberating because your code executes predictably in real time without being preempted by other threads or processes. This allows you to do things that require precise timing control such as operating servo motors for robotics projects.
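
To get a feel for why timing matters with servos, here is a rough Python sketch of the arithmetic. It assumes the common hobby-servo convention where the position is set by a pulse roughly 1 to 2 milliseconds wide, repeated about every 20 milliseconds. Real servos vary, so the default numbers and both function names are assumptions for illustration only.

```python
def servo_pulse_us(angle_deg, min_us=1000.0, max_us=2000.0, max_angle=180.0):
    """Pulse width in microseconds for a requested angle.

    Assumes a linear mapping from 0..max_angle degrees onto
    min_us..max_us, which is a typical but not universal convention.
    """
    if not 0.0 <= angle_deg <= max_angle:
        raise ValueError("angle out of range")
    return min_us + (max_us - min_us) * angle_deg / max_angle


def angle_error_deg(jitter_us, min_us=1000.0, max_us=2000.0, max_angle=180.0):
    """How far the servo position is off if the pulse is late by jitter_us."""
    return jitter_us * max_angle / (max_us - min_us)


print(servo_pulse_us(90))    # center position
print(angle_error_deg(100))  # effect of a 100 microsecond scheduling delay
```

Under these assumptions, a delay of only a tenth of a millisecond moves the servo by a noticeable number of degrees, which is why code that can’t be preempted is so handy here.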

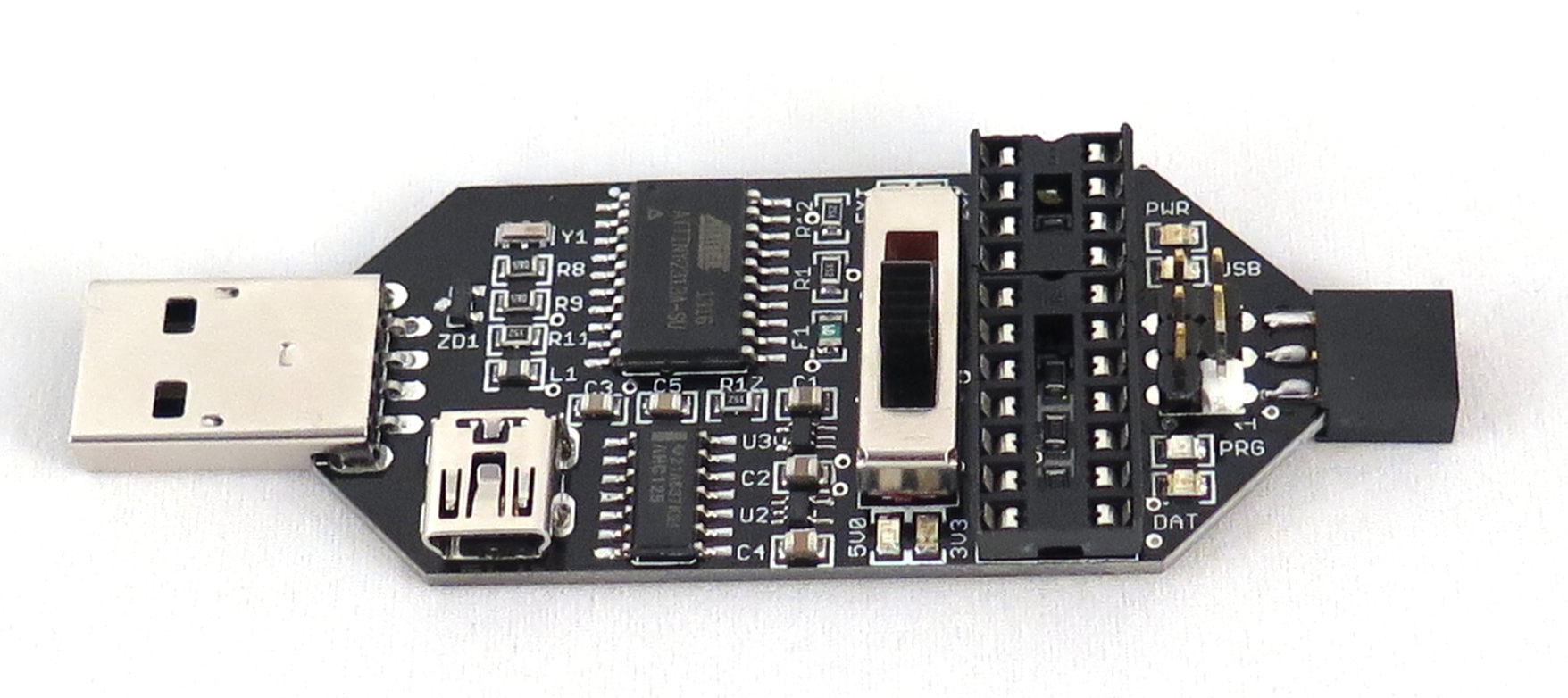

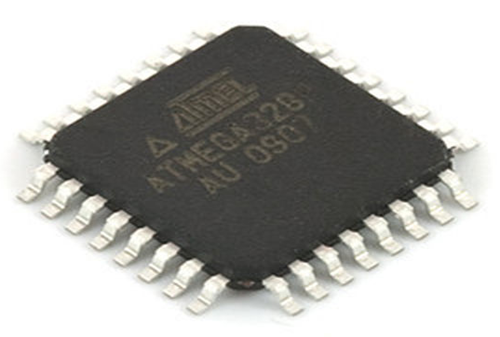

It’s still pretty easy to program, though, because there’s an IDE and libraries available to help you with the dirty work. Using the Arduino IDE on your computer, you write code in Arduino-flavored C++, cross-compile it for the Atmel AVR ATmega328 microcontroller on the board, and upload the resulting binary via a USB cable.

The Arduino is an extremely popular hobbyist board and there are a ridiculous number of tutorials, project descriptions, and forums to give you advice and inspiration.

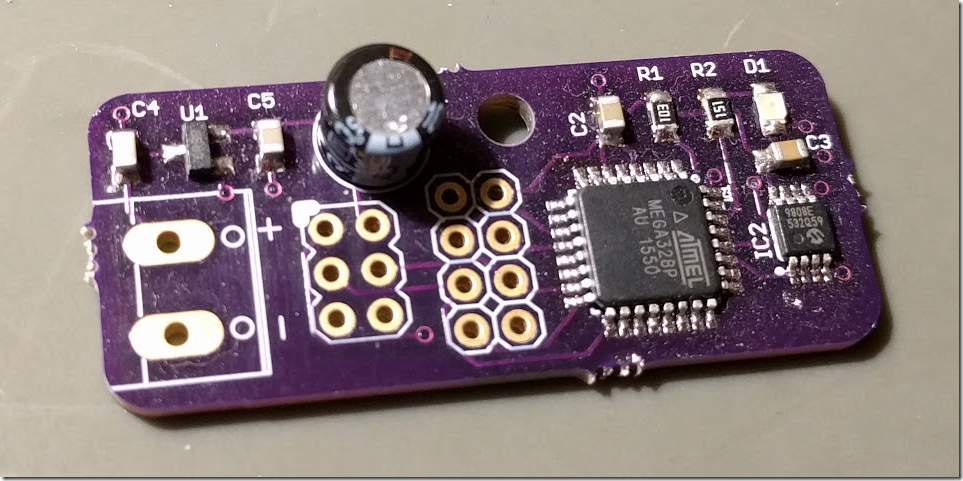

Bare microcontrollers

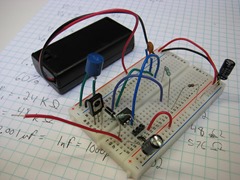

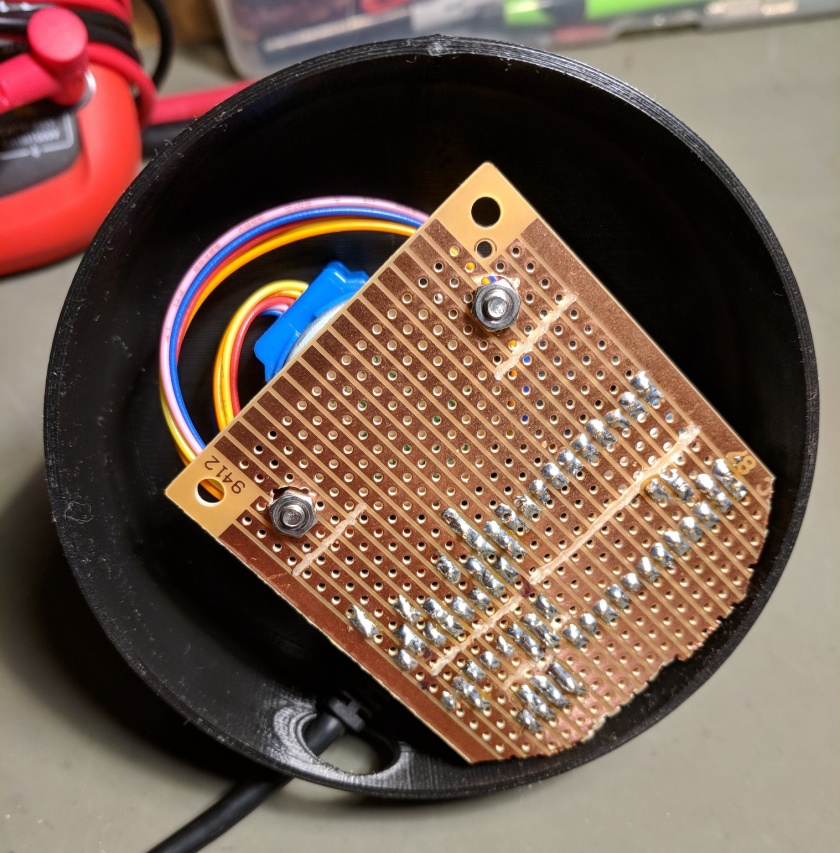

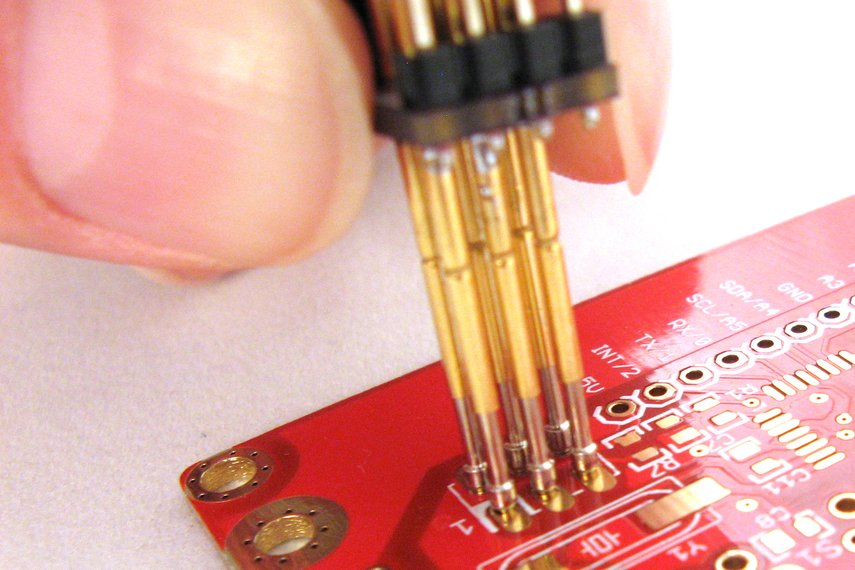

The Atmel AVR ATmega328 microcontroller that runs the Arduino is not a particularly modern chip but it’s well understood and a huge community has grown up around it. It’s quite inexpensive and easy to experiment with. It turns out that it’s not particularly difficult to buy the chip all by itself, whack it and a couple of other cheap components onto a breadboard, and make your own homebrew Arduino.

Furthermore, the AVR line of microcontrollers includes chips that are both larger and smaller than the ATmega328 used in the Arduino but they’re all software-compatible, so you can choose precisely the minimum amount of silicon you need to build your project. Using a bare chip in your project is a little more inconvenient than using an Arduino board but if you’re soldering stuff together anyway and you need it to be small, including an AVR chip directly in your circuit is the way to go. Besides which, soldering bare chips makes you feel like you’re actually doing electronics!

Analog circuits

So far, everything we’ve discussed is primarily centered around writing code for a processor of some kind with some ancillary circuitry thrown in. That’s a great way for a software developer to start but since my goal was to do something completely new, I needed to break out of the programming paradigm completely.

It turns out that there are all kinds of things you can do with just analog components and a breadboard; no digital code required. That was a revelation to me. I mean, duh, of course I knew that, but after 20 years as a software developer I had some pretty deep-seated biases toward digital logic. The first time I wired up a 555 analog timer chip to blink an LED and was able to change the rate of the blinking by merely swapping one resistor value for another, I realized that this was the “totally new” thing I’d been looking for. I still do a lot of stuff with microcontrollers in my projects but it’s become a game of sorts to see how little code I can get away with, with zero being the ideal. I’ve learned a lot about low-level electronics over the past year and I’ve barely scratched the surface. I’m having a ton of fun, though!
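
For the curious, the effect of swapping a resistor can be estimated with a few lines of Python. This sketch assumes the 555 is wired in the standard astable (free-running) configuration with two resistors and one capacitor, where the textbook approximation for the blink rate is 1 / (ln 2 × (R1 + 2 × R2) × C). The component values below are made up for illustration and aren’t the ones from my breadboard.

```python
import math


def astable_frequency_hz(r1_ohms, r2_ohms, c_farads):
    """Approximate output frequency of a 555 timer in astable mode.

    Uses the standard approximation f = 1 / (ln(2) * (R1 + 2*R2) * C).
    Real circuits will differ a bit because of component tolerances.
    """
    return 1.0 / (math.log(2) * (r1_ohms + 2.0 * r2_ohms) * c_farads)


# Illustrative values: R1 = 1 kilohm, C = 10 microfarads, two choices for R2
slow = astable_frequency_hz(1_000, 100_000, 10e-6)
fast = astable_frequency_hz(1_000, 47_000, 10e-6)
print(f"R2 = 100k: {slow:.2f} Hz")
print(f"R2 = 47k:  {fast:.2f} Hz")
```

With these example values, dropping R2 from 100 kΩ to 47 kΩ roughly doubles the blink rate, from about 0.7 Hz to about 1.5 Hz, all without a line of code running on the circuit itself.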

Tools and resources

There are several tools I’ve purchased and valuable resources I’ve found that have helped me in my learning. Below are some of the highlights. This is by no means an exhaustive list and there may well be even better choices out there, but these are the things that I found valuable to me.

Hobbyist stores and distributors

There are a lot of online stores out there that offer all kinds of electronics components. Some of them are good, a lot of them are sketchy. Adafruit and Sparkfun are two companies that I can highly recommend. They both carry high-quality components suitable for hobbyists, both carry lots of custom-designed components and boards to make building projects easy, and both publish a wide range of tutorials and project guides to help you use what you buy. Their prices aren’t the cheapest you can find, but I strongly recommend sending your business to these companies because they stand behind their products and are actively building up the hobbyist community. Just beware – browsing either one of those websites can be hazardous to your wallet. So much awesome stuff!

If you’re shopping for unusual components, or components with very specific characteristics, or a large quantity of components, then you might want to try a large distributor house. Mouser and Digikey are both well-regarded, reliable distributors that stock literally hundreds of thousands of different electronics parts. If you need it, they probably have it. Your biggest problem will be deciding exactly what you want to order. You want a capacitor? Mouser has 346,459 different kinds. You’ll need to get proficient with their catalog search tools and know how to read datasheets because there’s no handholding here.

Also be aware that these distributors focus on large-volume orders. They’re willing to sell you singles of most things but it’s not going to be particularly cheap. You need to buy in reasonable quantities to get discounts.

Electronic parts and kits

To get started, I purchased a couple of breadboards, the Beginner Parts Kit, and the Resistor Kit from Sparkfun. These are nice little kits that give you a few of each of the most commonly-used components for beginning electronics projects. This is a great way to get jumpstarted with building simple electronics circuits to connect to your BeagleBone Black or Arduino. There are lots of other options out there, of course, but I was happy with these.

When it was time to build a larger parts inventory, I was pleased with kits put together by Joe Knows Electronics that I ordered from Amazon. I have the resistor kit, capacitor kit, semiconductor kit, and LED kit and they’ll keep me going for a good long time.

Multimeter

A good multimeter is the first tool you should invest in as you pursue a hobby in electronics. A good choice is the Extech EX330. It’s reliable, has a good set of features, and is reasonably priced.

Soldering Station

I learned a long time ago that if you’re going to invest in equipment for a hobby, you should buy quality stuff that actually works well. There are lots of low-end soldering irons out there but most of them will simply cause you to be frustrated and maybe give up on the whole thing altogether. After some research I purchased an Aoyue 9378 soldering station that I’ve been happy with. There are several other good choices in this price range, too, but this one seemed to be recommended a lot.

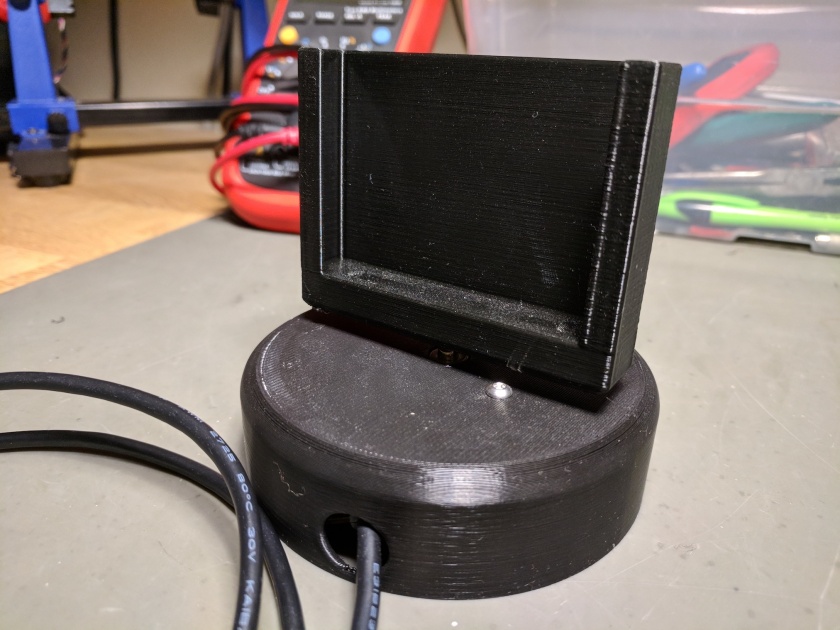

Power supply

One of the first “from scratch” projects you can build is a breadboard power supply to feed 3.3V or 5V power to your circuits. It’s fun and satisfying to build your own tools. However, when your needs expand to higher voltages or high current, and you need features like current-limiting to avoid blowing stuff up, then it’s time to get a real power supply. I purchased a Mastech HY3005F-3 power supply. It’s solid and reasonably priced. It’s not programmable or anything (that stuff is found in higher price levels) but it gets the job done.

Oscilloscope

An oscilloscope is an incredibly useful tool for understanding and diagnosing non-trivial circuits. Where a multimeter will tell you the voltage at a particular contact point right now, an oscilloscope allows you to see how it varies over time. This is the sort of tool where it’s hard to imagine how it would be useful until the first time you actually use one, and then you wonder how you ever got along without it. I chose the Rigol DS1102E and like it a lot. There are fancier ones available for more money but this one seems to do everything I need for now.

Blogs

There are a ton of great resources for the electronics hobbyist on the internet and I won’t attempt to list them all. One of them particularly stands out in terms of being both instructive and entertaining, though: EEVBlog. Dave has hundreds of video episodes covering everything from electronics fundamentals and theory to teardowns of various pieces of equipment to live repair jobs. I learned a tremendous amount just from watching how he uses his tools. I recommend starting with episode #168: How To Set Up An Electronics Lab.

Circuit simulation

Sometimes you don’t want to take the time to actually build a circuit on a breadboard to see if it will work. Or maybe you don’t have the correct parts at hand, or you’re afraid you might screw it up and blow up a component, or whatever. In such cases it’s very useful to be able to simulate your circuit ideas in software first before you commit to the real thing. There are software packages you can download for this purpose, but I’ve been pleased with CircuitLab.com. It’s not free but the user interface is very intuitive and it’s helped me better understand how transistors and opamps work because I can quickly set up various scenarios and see instantaneous voltage and current values, or chart them over time.

Go forth and learn!

So that’s a lot of high-level information about how to get started with an electronics hobby. You’ll notice that I didn’t discuss the “how-to” on anything, but a few web searches should turn up lots of resources for any particular topic that catches your interest. The most important thing is to actually do something. Start small, understand and master what you’ve purchased, then move on to the next step. Personally, I’ve found it to be very rewarding and I hope you will too.
